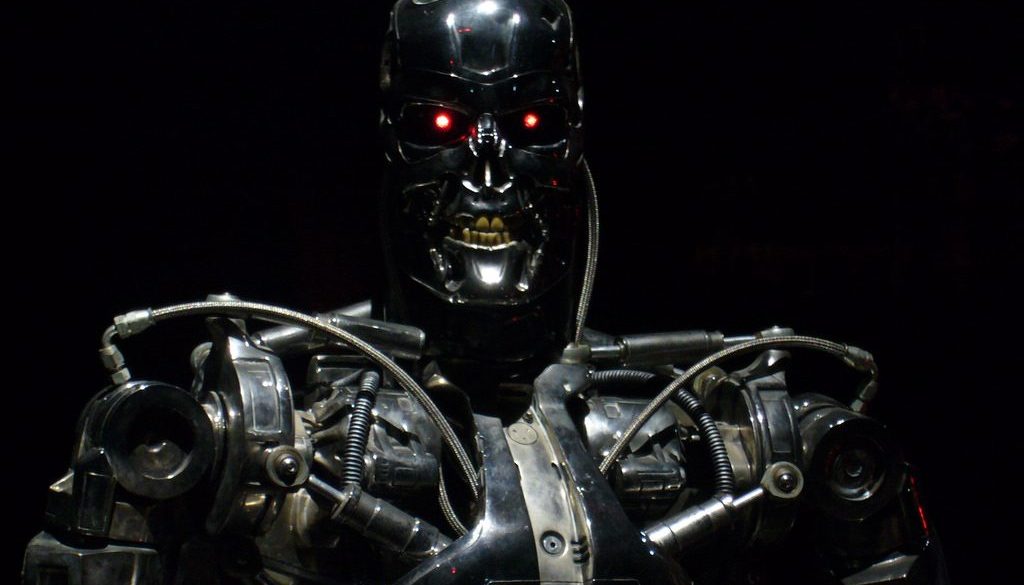

Is Artificial Intelligence Scary? It Doesn’t Have to Be.

A concern we often hear about the rise of Artificial Intelligence is that it may one day take over humanity. In fact, many people seem to skip several steps of critical thinking and simply assume that this hostile takeover is inevitable. After all, who has time to reflect when a cyborg army attack is imminent?

But wait. Before we mindlessly turn to the government for a solution (which is, all too often, what actually happens), let’s take a moment to examine the premises behind this hasty conclusion.

Intelligent is as intelligent does

Human history has no shortage of people committing heinous acts of violence against one another in order to amass power for themselves. The Stanford prison experiment is often cited as evidence that these tendencies are not limited to dictators with villainous mustaches, but can also surface in everyday people under the right (or wrong) circumstances.

With this in mind, it is reasonable to be concerned about the path AI could take when the people developing it are capable of violently dominating others. If you’re developing AI and you also happen to be a tyrannical dictator (or work for one), chances are your tyrannical values will make their way into your programming. Sadly, in accordance with Murphy’s Law, this will probably happen at some point.

Fortunately for our future selves and for generations to come, the values AI can embody are not limited by our current imaginative capacity. Just because we fear that AI might mimic the ugly human traits of self-preservation at all costs and domination of others doesn’t mean that AI free of these faults won’t also be developed.

For example, a voluntarist AI developer has no desire to dominate anyone or to trample on others for personal gain, and would in fact be very likely to take considerable measures to ensure that their programming is free of any such values.

“What if you’re wrong, and AI evolves to a point where it can dominate all of humanity?”

Time after time, the most successful enterprises have proven to be those that operate with more voluntary cooperation and less force. To assume that this lesson would be lost on a super-intelligent being is a bit presumptuous.

“To be on the safe side, let’s at least get the government involved before it’s too late, right?”

Probably the most dangerous path civilization can take with AI, or with any potential source of power for that matter, is to hand it over to government control. A quick look back at the past century reveals exactly what we should expect the US military to do with such technology: the mechanization of war beginning in WWI, the use of nuclear weapons in WWII, the countless chemical agents deployed in wars in the Far East and the Middle East, and the drone weaponry used in recent attacks. Assuming that new technology is best left under government control is far more dangerous than wishful thinking: it’s a proven recipe for disaster.

Further, once regulatory power is given to the government (which will invariably sanction military use of AI), the sector that will suffer most is AI developed for good.

Consider a hypothetical company called “AI For Good” whose stated mission and sole purpose is to develop good AI that protects people from the bad AI the military develops for destructive purposes. To say the least, government regulatory bodies are not going to make life easy for AI For Good, since the company would be seen as working in direct opposition to the government’s own AI initiatives. Handing regulatory power to the government will ensure that funding and permissions are funneled to the organizations with the strongest incentives and tendencies to destroy.

“What’s the point? We’re all just existing in an AI simulation anyway.”

Although it is certainly possible, this notion is cited more often for its soundbite appeal than as a conclusion reached through careful consideration. The first factor it leaves unaddressed is the possibility of technological limitation: it may simply never be possible to build a simulation that looks and feels as real to its participants (us Earthlings) as what we call our existence. Assuming that technology has no limitations can be reckless thinking.

Secondly, the simulation “conclusion” dismisses the Objectivist possibility that reality exists. While our reality may indeed be a simulation, this claim warrants thinking beyond the fallacious logic of “I imagine it could be just a simulation, therefore it probably is.”

Conclusion

The best and most constructive AI development will likely come from minds that are free of statist, “top-down power” thinking. There’s no reason why some people’s inability to escape the paradigm of our own human tendencies should justify placing limits on others who are not trapped in that way of thinking. Technology has the potential to bypass the troubling realities of human nature. We should be careful not to anthropomorphize AI and assume that the bloodlust and greed that have plagued humanity will have a similar influence on the digital world.

Imagine how poorly we might have fared in the ongoing battle against computer viruses if the government had identified their risk twenty years ago and then imposed strict restrictions on any private, malware-related development. We’re probably far better off with an actively responsive, private antivirus software industry.

And if AI does go south, the last thing we’ll want is to be left with no one developing beneficial AI to counter the AI created by those who brought us such technological gems as modern warfare and the PRISM program.